As a statistics instructor in the early part of my career, I know how boring statistics can be (to most of us). However, grasping the fundamentals of statistics is critical for your success as an HR professional. Without it, you cannot capitalize on the huge potential data science offers HR.

In this article, I will decipher HR statistics through storytelling. I will answer 19 questions typically asked by young business and HR managers who are about to start their analytics journey.

Analytics is a combination of the two terms “analysis” & “statistics”. It refers to any analysis driven through the application of statistics. HR analytics is analytics applied to the domain of HR. These analytics can be advanced predictive analytics, or basic, descriptive statistics. This means that HR analytics is a data-driven approach to managing people at work (Gal, Jensen & Stein, 2017).

The world of HR, as well as people in general, can be extremely random. Statistics help to understand, capture, and predict the randomness of our world. It can reduce risk by estimating uncertainties and reveal hidden aspects in day-to-day HR processes. For example, using analytics you can predict who is most likely to leave the organization, estimate whether you are rewarding different employees fairly, and discover biases in your hiring process. Without it, you miss out on a lot of valuable information needed to make better people decisions.

It’s always statistics in one form or another to the rescue!

We wish it were. Unfortunately, statistical modeling can only explain part of the problem. We don’t live in a perfect world. Unlike theoretical math, which deals with direct computations, statistics are about probabilities.

Statistical models are always subject to unidentifiable factors called noise and inherent errors. Noise and errors make our predictions less certain. Having said that, a good statistical model will be invaluable and help to make much better people decisions.

Uncertainties are not bad. They are part of everything we do. The application of statistics reduces uncertainty to their lowest denominator. This provides HR with a robust and accurate decision support system.

Yes, bias! Bias can be a significant factor in any statistical analysis. This could be due to poor sampling, selective information or even lack of adequate data altogether.

Take the popular example of the school kid who took third position in a race. Great job, kid! However, the race only had 3 participants. Perhaps a less than stellar performance after all. This is an example of selective information and could be solved by collecting more information.

In the real world, sampling biases could inject a substantial amount of selective information. It can even be deliberately designed to favor vested parties of interest, rather than representing the facts. This is not very different from accountants who cook up the financial records, isn’t it?

Alright, game on!

Statistics has just three core parts, that’s all. These three musketeers (individually or combined!) can tackle any data-driven solution for HR under the sun!

Nope, they are joined at the hip! Let me clarify all three through a real-world analogy:

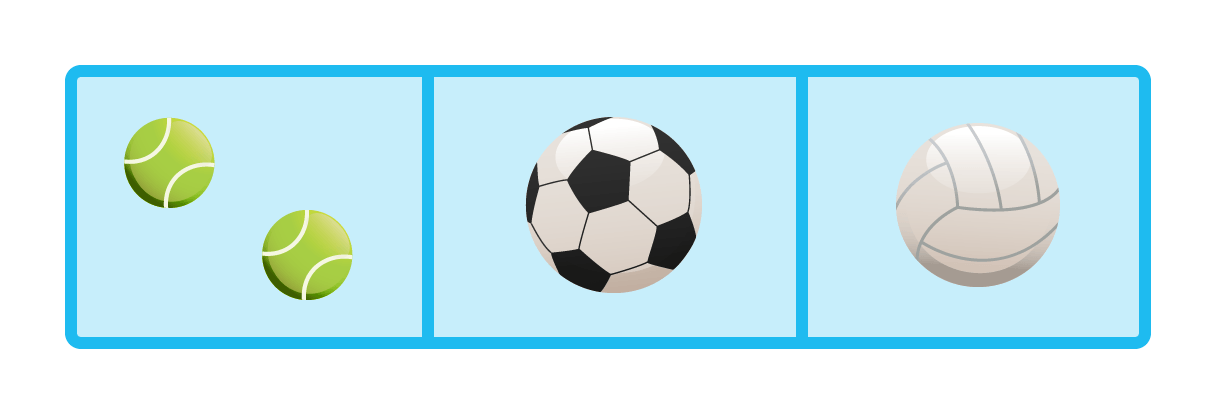

Imagine both of us enter a sports auditorium and find a basket containing 4 sports balls in the middle. In this example, there are two tennis balls, one volleyball, and one soccer ball. We could refer to this as our dataset.

Now both of us, individually, are tasked to group the balls by their circumference. We don’t observe each other’s groupings, of course.

I identify 2 groups based on circumference:

I put them back in the basket. You repeat my exercise and identify 3 groups based on circumference.

Both of us have inadvertently used descriptive statistics when we grouped the ball by circumference. We then compared the groups to the total number of balls and gave our unique solutions. However, the important question is: Why did we group them differently?

Now, this is the essence of inferential statistics.

Nope, that’s the beauty of inferential statistics. Both of us are correct, only our inferences were slightly different. The soccer ball was 98% of the circumference of the volleyball.

For me, this 2% difference in size seemed insignificant. Therefore, I allocated them to the same group. To you, the 2% difference in size did seem significant, which made you allocate the two balls to two different groups.

See how we used the terms ‘significance’ and ‘inferences’ in conversational English? These terms for the backbone of any statistical analysis.

Aha, that is only partly true! The decision can change even with the same level of significance. How? Let’s dig a little deeper and see where the idea of confidence level originates from.

Say, instead of just one soccer and volleyball, we had 10 balls of each type. We would then need to take the average circumference of 10 units (of both types of balls). Now the average of 10 soccer balls comes out to be 99% the circumference of the average of 10 volleyballs. An upgrade from 98%, isn’t it?

Here the 1% difference in size is insignificant to both of us. We, therefore, allocate both balls to the same group. See how our decision changed with a larger sample size? It’s important to note we are also more confident about our inference with a larger sample size. This confidence level is an important term often used in statistics.

Statistically significant outliers

Finally, let’s average 100 units of each type of ball. Now the soccer ball is 100% the average circumference of the volleyball. Now, even the most skeptic among us won’t have any doubts that the soccer ball set and the volleyball set are of the same circumference.

This explains statistics in a nutshell. It’s all about inferences levels (which can be tailored) and how we are more confident about our inferences the larger the sample size.

In our previous example, soccer balls and other types of balls undergo rigorous quality control in their manufacturing. This results in them having minimal variation. People, however, do not undergo “rigorous quality control” in their “manufacturing”. People are a lot more diverse and have a lot more variance between each individual unit.

Both examples, however, approximate a normal distribution in real-life.

Let’s take the analogy of the soccer ball to another dimension using its shape. The distribution of real-life processes (like productivity per employee, or travel distance per day) when plotted on a chart manifest themselves in different shapes. However, because scores tend to stick to the average, they always follow the same pattern. They are more shaped like a rugby ball.

For the simplest explanation possible let’s take look at a sand hourglass.

The fine grains falling through the glass have a mini microns’ difference in size and weight. The pyramid, however, always follows an approximate normal distribution, time and time again (pun intended). Similar to a half rugby ball. This means variance patterns between different units are naturally expected to be within a certain range.

For another explanation of the normal distribution, look at this brilliant YouTube clip. Click the video to start – even though it looks grayed out, it works!

Sure thing! Let’s take two identical pictures of our rugby ball. Then, take two printouts of it and randomly put a hundred dots on both the pictures (roughly covering the entire surface of the image). The images of the rugby balls are identical, but the pattern of dots on both the balls aren’t identical.

Why? Many of the random 100 dots on both the rugby balls have varying distances from the center of the image (mean). Therefore, it’s hard to tell the overall difference without measuring the distances of all the dots.

Now, replace the 2 rugby ball images with 2 recruitment teams of a global recruitment center in HR. Each image, or global recruitment center, has 100 recruiters. Replace the random dots with the recruitment performance of each recruiter.

The exact center of the rugby ball represents the mean performance of each team. Are the performances of both teams equal? It’s the same case. The same rugby ball. The same 100 recruiters. The same overall area. However, because the dots are scattered differently over the rugby balls, the overall performances aren’t necessarily the same.

Yup, spot on! Instead of just one factor (the mean), we now have two factors (the mean AND the variance) that are important.

Comparison between the mean and the variance is the soul of statistics.

The mean (average of all recruiters’ performances) and variance (the distance of each recruiter from the mean). Master these two concepts and you’ve mastered 90% of applied statistics. I mean it (pun intended)!

That’s all there is to it! As you can see, this breaks all statistics down to their 4 lowest denominators.

The exact tests would depend upon the type of insights required for the business. A typical starting point would be a t-test or an ANOVA. ANOVA literally stands for analysis of variance. Both of these check whether there is a significant overall difference between the mean of two groups, such as two HR recruitment teams.

Sure. We select a sample set from our data. Say the performance of 30 recruiters (out of 100) from each team. These are randomly selected, of course, to eliminate any bias.

Now we have the data in terms of average performance (of both the sample and the total) and the variance. That’s all the data we will need.

We apply the t-test formula. It’s a linear algebra jugglery on the mean, the variance and the total number of recruiters. We will explain the logic behind the formula in a next article:

The computation will take about 12 minutes via hand calculations (using a basic calculator) or a few seconds using a statistical analysis software or an Excel macro.

Now the output could be something like: t = -3.73, p = .07.

The t-value is the outcome of the t-test. P stands for probability. In this case there’s a .07 (7%) probability that our findings are random – and a 93% probability that these are actually true (not caused by randomness or chance).

Based on the same report, we could still end up with different answers to our hypothesis. Why?

Because our understanding of the “significance of difference” for the p-value of t-test was different. In other words, your criteria for significance of the p-value was smaller than .10, whereas my criteria for significance was smaller than .05.

The p-value in our test is .07.

In your case, the p-value of the test was lower than your value of significance. This means that, according to you, there was a significant difference in the mean between the two groups.

In my case, the p-value of the test was higher than my own value of significance. Therefore, I don’t think that there is a significant difference between the two groups.

The p-value means the chance that the difference between the two groups comes from random chance. As in, there is no actual difference between the two groups. In our case, there is a 7% chance that the difference between the two groups is due to chance. This was enough for you to conclude that there was a significant difference between the two groups. I had higher standards and thus did not think that this chance was low enough to warrant the same conclusion.

As you can see the inferences (decisions) change with changes in sample size.

The narrower the confidence interval, the more confident we are about our analysis. If our data-set follows normality than its width should inversely correlate with sample size. This follows the central limit theorem. I will cover this and other key aspects of statistical tests in the next part. This part was just to give an overview of the discipline of applied statistics and its relevance to HR.

In module 1, you will learn how statics affect your everyday life and how it can help us in HR. You will learn about simple techniques to understand data better, like the mean, mode, median, and range. You will learn about the spread of data, how this influences your data analysis, and finally, you will learn techniques to clean and visualize data. The first two modules prepare you for the actual analytics in module 3 and 4.

In module 2, you will learn more about methodology. Statistics and methodology go hand in hand. One cannot work without the other. In this module, you will learn about sampling, bias, probability, hypothesis testing, and conceptual models. These are key concepts to understand before you can accurately analyze your data.

In module 3 and 4, you will learn basic and advanced statistical tests. We will start with correlation analysis, t-test, and ANOVA. In the second part, you will learn about linear regression, multiple regression, logistic regression, and structural equation modeling.

We dedicate a full lesson to explaining each of these tests. We will explain how these tests work, when they can be applied, the core assumptions of these test, and we will link you to various resources to execute these tests in the software that you use.

Because these tests can be done using Excel, SPSS, R, or another statistical package, we focus the lessons on a step-by-step process of doing the test which is independent of the software you use. This means that you will have all the information to do this test regardless of whether you’re using R or SPSS.